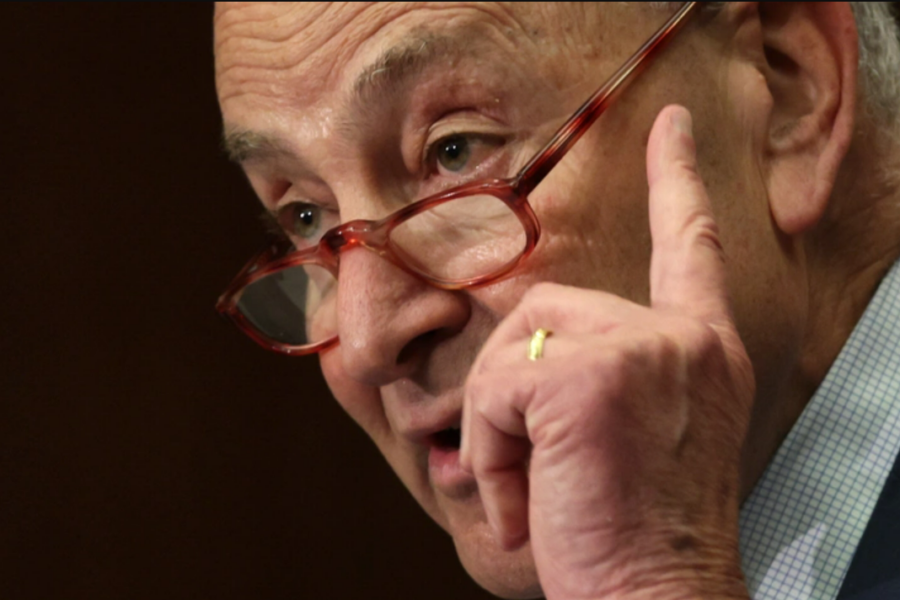

Sen. Schumer’s ‘ambitious’ new approach to AI regulation

U.S. Senate Majority Leader Chuck Schumer is proposing a new way to regulate AI since it is “unlike anything Congress has dealt with before.”

In a speech at the Center for Strategic and International Studies, the New York senator said he will not take the typical approach of holding congressional hearings that feature opening statements and five-minute rounds of Q&A in which each legislator asks about different issues.

“By the time we act, AI will have evolved into something new,” Schumer said. “This will not do. A new approach is required.”

While AI holds much promise in transforming life for the better, there are also “real dangers,” including lost jobs, new weaponry and misinformation. As such, Congress has “no choice” but to acknowledge its impact, he said. “We ignore them at our own peril,” Schumer said. “With AI we cannot be ostriches sticking our heads in the sand.”

Having published a proposed framework in April, Schumer added a two-part proposal to the plan.

First, he is proposing the creation of a congressional framework for action called the SAFE Innovation Framework for AI Policy to support innovation while also adding guardrails. Schumer argued that without guardrails, AI innovation will be stifled or halted because of its risks.

This framework would seek to answer the following questions:

- What is the proper balance between collaboration and competition among the entities developing AI?
- How much federal intervention on the tax side and on the spending side must there be?
- Is federal intervention to encourage innovation necessary at all?
- Or should we just let the private sector develop on its own?
- What is the proper balance between private AI systems and open AI systems?
- How do we ensure innovation and competition is open to everyone, not just the few, big, powerful companies?

Schumer said that “without making clear that certain practices should be out of bounds, we risk living in a total free-for-all, which nobody wants.”

Is AI SAFE?

The SAFE in the SAFE Innovation Framework stands for security, accountability, foundations protection and explainability.

To advance security, there must be guardrails around AI advances so that nefarious groups cannot use them for “illicit and bad” pursuits. Security also refers to job security, particularly the displacement of white-collar knowledge workers by generative AI. Schumer said care must be taken not to further erode the fortunes of the middle class.

To boost accountability, AI technologies must be prevented from being used for harms such as tracking kids’ movements, exploiting people with addictions or financial problems, perpetuating racial biases in hiring and violating the IP rights of creators.

To read the complete article, visit IoT World Today.