Derecho-related outages put 911 system under scrutiny

(Ed.: This article originally appeared in print as “Winds of Change.”)

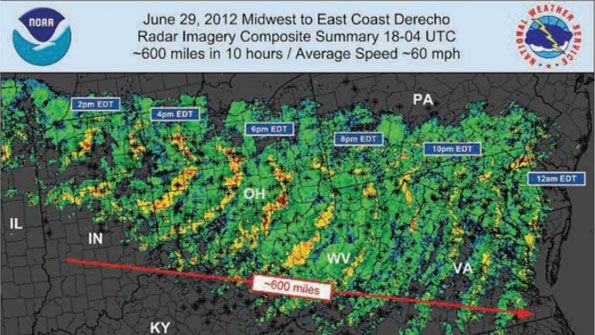

Starting as a thunderstorm cell in Iowa on the morning of June 29, this summer’s derecho — straight-line winds often reaching hurricane force — evolved into one of the most destructive weather events in the United States, particularly as it traversed an 800-mile path from Illinois to the mid-Atlantic states, including Virginia.

With winds reaching speeds of 92 miles per hour, the devastation was severe. In addition to the 22 fatalities and numerous injuries attributed to the storm, about 3 million people lost electric power, and the physical damage was so great that several governors declared states of emergency, with President Barack Obama declaring some locales federal disaster areas.

Adding to the frustration inherent in such significant losses was the fact that many people could not call 911 to notify public-safety agencies that they were in dire need of help. Overall, millions of people were unable to reach a 911 call-taker, in some cases for days, because of outages in carrier networks and/or public-safety answering points (PSAPs), according to the FCC.

Clearly, the loss of the commercial power grid across large areas in the derecho’s path hampered communications equipment, but federal officials were not willing to accept that as an excuse.

“Here is the bottom line: We should not — and do not — find it acceptable for 911 to be available reliably under normal circumstances where a range of emergencies take place, but not available when a natural disaster occurs,” said David Turetsky, chief of the FCC’s public safety and homeland security bureau, during a session at the Association of Public-Safety Communications Officials (APCO) show in August.

“That’s simply unacceptable. Our networks need to be just as reliable or resilient when there is an enhanced need for emergency assistance as when there is not.”

These stern words were backed by action from regulators and policymakers. On Capitol Hill, the House Subcommittee on Emergency Preparedness, Response and Communications examined the derecho’s impact on the 911 system during a September hearing. Meanwhile, the FCC conducted a proceeding and inquiry into the 911 outages associated with the derecho.

“The goal of this inquiry is simple — to use this information to make people safer,” Turetsky said during his testimony before Congress.

This goal has been applauded by public-safety and telecommunications industry officials alike, but opinions differ significantly on the appropriate next steps. While some believe that carriers should be subject to new regulations regarding the resiliency of their networks and their reporting responsibilities to public-safety agencies, many in the telecom sector contend that the FCC should establish best practices for carriers to follow in a cost-effective manner.

A system or network is only as tough as its weakest link. Over the years we have learned to have redundant power, (hopefully) enough radio coverage to fill in gaps if sites go down, and spares, whether a complete site on a trailer or generators for the traffic lights.

Unfortunately, not all of the system is under our control. The LEC determines how calls get to the PSAP, and often the radio vendor determines how the remote site is connected to the dispatch point. In both cases, convenience and possibly cost override the judgment of emergency responders and managers.

Before 9-1-1, one center had a 400-pair telco cable that came from a microwave terminal across the street. They had asked for two routes, just in case. The cable was cut before the center officially opened. Before 800 MHz, the same system had a 26-channel microwave system to control remote sites. It was designed with failover and alarms, and the sites were monitored. After the move to 800 MHz simulcast, T-1 cables were leased without redundancy and with carrier-only monitoring. There have been multi-cable, multi-day outages that went undetected until the dispatched agencies failed to respond to calls.

A center that handles all the fire, EMS and 99% of the police calls for over 1M residents, plus cell calls from tourists (99 fire companies, 50 EMS-only responders, 60 or so township police units), relies on one LEC and 2 call concentrators that then feed one cable to the primary site. The wired telephone network, including the center’s VPN for mobile data, is old, fragile and often overloaded. The competitive carriers use existing wires for VoIP, or the TV cables where the local cable carrier is as big as the telephone company, but nowhere near as rugged.

We need to regulate reliability again and penalize the carriers who don’t respond. Paying extra for rapid response or priority restoration is counterproductive. We should not have to pay extra to be restored, because we should not have network failures that rise to the point where we cannot answer a 9-1-1 call with full features and successfully dispatch assistance. We should not have to tell a utility that their wires and cables are down in the street; they should be telling us where their crews are working to clear trees or debris and restore service, especially in aerial territory where communications and power share the same poles and routes.

Now someone please explain to me how a 9-1-1 system is going to remain functional after an EF-5 tornado or Cat. 5 hurricane goes through a community, destroys the telephone central office and topples all the cell phone towers. Instead of Enhanced 9-1-1, it sounds like someone wants Invincible 9-1-1. I guess the next thing will be an Invincible P25 radio system that will continue to function if all of the towers are lying on the ground!

Times have changed for the telco companies. Twenty or even 10 years ago, whoever was responsible for the generators, air conditioning and building electronics would have been fired for not doing their job of keeping this infrastructure operational. But with today’s mindset and upper management’s lack of understanding, they just shrug their shoulders and say, “Guess we had a problem.” If you read what a few of the people quoted in the article actually said, you can see what I am talking about. One statement that stood out was the reference to shedding the power load by shutting off the air conditioning and lights. I guess these people have never walked through a working switching central office. Even with the new equipment, it is hot with the air conditioning running. If you shut it off, the high heat will kill the entire room. Bet they were never told that electronics don’t like high temperatures.

The other mention was about testing generators. Again, 10 years ago, if you didn’t test the generators once a week, you would be fired. How can you stake your whole operation on a “maybe the generator will run” if the power goes out? And with the cost of fuel today, when was the last time anyone checked how much fuel was in the tanks? There was plenty of warning that a major storm was headed east. That should have been more than enough time to top off the fuel tanks and fire up the generators for an unscheduled test. That way you would know they would crank up if they were needed. My bet is that many of those generators haven’t been run for months. Upper management chose to save on fuel costs rather than make sure the generators were available when needed.

Storms are a reality in this country these days. The only part of the picture we don’t have is when they will hit and how bad they will be. Are the carriers prepared? That might depend on just how much heat they are getting from the state they operate in. Again, these companies should be fined for the poor performance they displayed in this one storm. The length of time it took to get the 911 systems back up and fully functional is just not acceptable. Having spent some 30-plus years in the telecommunications field rubbing elbows with these companies, I have seen what used to go on. It’s a long way from how they operate today. I will pick on Verizon because there were so many comments from the company in this article. Go back and read what the company officials said. I never saw any specific comments on just what they were going to do to reduce the outages. Most of it was just whitewash to give the press something to wonder about.

Hope everyone is better prepared for the next storm. We might just get a chance to find out, if the storm now getting ready to hit Cuba decides to swing to the west just a bit. East Coast, here it comes.

The FCC has become way too political.

Natural disasters are just that: beyond man’s capacity to control. No organization (telco, wireless, local, state or federal government) has the funding to make fully redundant systems, so the real quote from the FCC should have been, “The 911 outages from derecho storms must be acceptable because our society can afford to give everything to everybody who wants everything for free.”

If the FCC really wanted to know what was going on with the 9-1-1 community, then David Turetsky, chief of the FCC’s public safety and homeland security bureau, would go to national NENA and APCO, set aside at least one full day, maybe two, and allow the public-safety community to tell him and his staff, in 15-minute windows, what they think needs to be done. Will this give the FCC the exact remedy for the changes that need to be made? No, but it will give more insight than the FCC is getting by just having the LECs and “Special Spokespersons” from the major integrators speak to them about the “nirvana” approaches that are all built around the cash cows that might be in jeopardy.

I don’t agree with the comments of our “ANONYMOUS” commenter. No one has said that you need a fully redundant system, but you do need to build in backup for the areas that will take you down. The 911 telephone feed from the central office is probably the number one weak spot in any 911 operation. The second is backup power. The third is one that most 911 centers have forgotten about: the air conditioning system. You have no idea how many centers I have been in that had only one means of cooling the operations center. If the air conditioning failed, it was open the doors, put big loud fans in them and hope for the best.

My time working for a couple of consulting firms showed me that it doesn’t take that much more effort and expense to add a second air conditioning system. You can use the same duct work and use dampers to isolate the different systems. The people that work there will forever thank you for the effort.

A second area of neglect is the backup generator. Sure, you put in a generator with an automatic transfer switch. But if you don’t run it under load each week, you will never know whether it will work when you need it. I can tell you all sorts of stories about the practice of running the generator each week, but with no load on it. Then when the power goes out, the transfer switch is rusted or frozen in the normal position. No matter how hard you bang on it, it won’t budge.

Another problem that shows up is a fouled fuel filter. When you put the load on the generator, not enough fuel can flow, so the generator runs at idle. That sure does the center a bunch of good when you don’t have emergency power.

I wasn’t trying to jinx anyone, but in my previous posting I mentioned that Hurricane Sandy was just about to hit Cuba, and that if it made a turn to the west, someone along the East Coast might have a problem. It’s funny how people I have warned in the past never come back and ask me a second time what I think a storm is going to do. After that, they tend to listen the first time.

The same thing goes for comm centers. Troubles are predictable. You can only minimize the troubles by planning ahead. Unfortunately many public safety agencies tend not to listen to those with the experience.

Load testing does not just apply to one generator but to whole regions. Can your techs access, refuel and maintain all of the generators in a geographic area the size of one of these storms? Can your techs reach sites isolated by floods, blocked roads, etc. before the fuel runs out? A 20-minute road trip in normal weather may become an all-day wilderness adventure after a storm. Twelve-hour tanks are not big enough. I’ve had to deal with customers after 2-3 weeks without cell or landline service; it wasn’t pretty.